I just happened to be holding a theater-style box of candy last week when I began to read an article about how Google search ranking works. The more I read, the deeper I was drawn into the story, as if it were a movie on the big screen, and I chomped on the candy fully engaged. The publisher, Search Engine Land, estimated a reading time of 31 minutes for this big boy. I know I spent more than that, allowing for at least a few minutes to take in the fabulous accompanying graphic.

Are you familiar with the iconic Schoolhouse Rock video “I’m Just a Bill” about how a bill becomes a law? This article reminds me of that, except that it’s about how a new document works its way through Google’s multiple systems (Trawler, Twiddler, NavBoost, Tangram and so on) on its way to becoming a search ranking.

A must-read by Mario Fischer, the piece draws on his analysis of what can be learned from the massive leak of Google documents in May and what was disclosed in the U.S. Department of Justice release of trial exhibits last November.

“Anyone who is even marginally involved with ranking should incorporate these findings into their own mindset,” Fischer writes. “You will see your websites from a completely different point of view and incorporate additional metrics into your analyses, planning and decisions.”


Which is not to suggest that all of the mysteries of the Google universe are now known. “This article is not intended to be exhaustive or strictly accurate,” Fischer says with no hint of irony. It just is what it is, and I recommend the piece in full.

Because Fischer’s narrative is far too involved to attempt to summarize here, below I’ll call your attention to a few random insights shared. Remember that I read with asset manager and wealth management website content in mind, with some familiarity with their visibility challenges including in Google rankings.


What the human quality raters do

The several thousand humans (the civilian crew largely responsible for E-E-A-T, or Experience, Expertise, Authoritativeness and Trustworthiness, ratings) have been a source of endless fascination to me. E-E-A-T ratings are especially germane to investment sites as part of the Your Money or Your Life topics that Google holds to a higher quality standard. Rather than being something onerous, E-E-A-T represents an opportunity for investment firms especially, if deliberate steps are taken.

What are the humans who influence the rankings looking for? What does it take to impress them? How do they even contribute to what is a famously computer-driven operation?

Here’s the skinny from Fischer:

- Raters receive URLs or search phrases (search results) and are asked to answer predetermined questions, typically assessed on mobile devices. Take note: How do your Insights pages, in particular, look on mobile? Do they fully represent the authority of your site or have you made some design concessions that have resulted in a substandard display?

- Typical questions: Is it clear who wrote this content and when? Does the author have professional expertise on this topic? The raters’ Yes or No answers are then used to train machine learning algorithms, which analyze the characteristics of the good and trustworthy pages versus the less reliable (or maybe computer-generated). The humans don’t create the criteria for ranking. Instead, Google’s algorithms use deep learning to identify patterns based on the training provided by the evaluators.

In the example Fischer provides, the humans could intuitively rate a piece of content as trustworthy if it includes an author’s picture, full name and a link to a LinkedIn profile. The algorithms would learn to boost pages with those features. Pages lacking them could be perceived as less trustworthy, resulting in a devaluing of the page.

- Raters’ evaluations are compiled into an “information satisfaction” score. Such scores are produced for a small percentage of URLs. For other pages they’re extrapolated for ranking purposes.

Whether it’s for a human rater visiting your new content (highly unlikely, statistically speaking) or an algorithm ranking your page based on the humans’ work, encourage your contributors to explicitly assert their subject matter experience and expertise. Attention to this could be to your firm’s significant advantage (see sidebar).

Apart from what we’re learning about the E-E-A-T process, there’s much more online commentary lately about Google’s expectation and measure of information gain: that a piece of content should provide new, useful and relevant information that adds to the knowledge a user can find elsewhere. Fischer’s commentary also confirms the devaluing of documents judged to have similar content.

Information gain guidelines require content to offer something fresh and insightful, while E-E-A-T says the content needs to be provided by sources judged to be trustworthy. The combination of strong information gain and high E-E-A-T is essential for investment and other Your Money or Your Life sites.

Dates can make all the difference

It breaks my heart to see firms publish timely content without a date. Date resisters have a rationale (let’s not date it, we intend for it to be evergreen, etc.) that is in direct opposition to the page’s and the site’s best interests. The inclusion of a date gives your new content a fighting chance with Google as it’s being evaluated for the first time.

Google can extract the date from the URL and/or the title or take it from the page, Fischer says. However, from other sources we know that structured data leads to faster crawling and supports Google’s goal to allocate resources more efficiently.

My advice: make sure the date is included in the source code. If you use WordPress, the base template likely has you covered, but it may be an explicit step you need to take with a different CMS. Not sure? Look for datePublished or dateModified or even bylineDate in your source code.
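If you’d rather not eyeball the source, the check can be scripted. What follows is a minimal sketch, not a definitive audit: it assumes the date lives in a top-level JSON-LD block (dates nested inside an `@graph` array, microdata or meta tags would need more work), and the function name `find_date_fields` and the sample page are made up for illustration.

```python
import json
import re

# Field names mentioned above, matched exactly as they would appear in markup.
DATE_KEYS = ("datePublished", "dateModified", "bylineDate")

# Matches JSON-LD script blocks, a common home for these fields.
JSON_LD_RE = re.compile(
    r'<script[^>]*type=["\']application/ld\+json["\'][^>]*>(.*?)</script>',
    re.DOTALL | re.IGNORECASE,
)

def find_date_fields(html: str) -> dict:
    """Return {key: value} for date fields found in top-level JSON-LD objects.

    Keys that appear somewhere in the source but not in parseable
    JSON-LD are reported with a value of None.
    """
    found = {}
    for block in JSON_LD_RE.findall(html):
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            continue  # skip malformed blocks rather than fail
        items = data if isinstance(data, list) else [data]
        for item in items:
            if isinstance(item, dict):
                for key in DATE_KEYS:
                    if key in item:
                        found.setdefault(key, item[key])
    for key in DATE_KEYS:
        if key not in found and key in html:
            found[key] = None  # present in the source, but not in JSON-LD
    return found

# Hypothetical page source for illustration only.
sample_html = """
<html><head>
<script type="application/ld+json">
{"@type": "Article", "headline": "Q3 Outlook",
 "datePublished": "2024-08-01", "dateModified": "2024-08-15"}
</script>
</head><body>...</body></html>
"""

print(find_date_fields(sample_html))
```

An empty result for a page you know is dated is the cue to ask your developers where, if anywhere, the date is exposed in the markup.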

Don’t play fast and loose with the dates, either. According to Fischer, if you see an old date on your content and decide to update the date without updating the copy, Google will notice and that could result in a downranking (demotion). Google stores the last 20 versions of a document, fyi.
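A pre-publish guard against this is easy to sketch. The example below is an illustration only: the 5% `min_change` threshold and the function name are my own assumptions, and nothing here reflects how Google itself compares versions. It simply flags a revision whose date moved forward while the copy barely changed.

```python
from datetime import date
from difflib import SequenceMatcher

def date_bump_without_update(old_text: str, new_text: str,
                             old_date: date, new_date: date,
                             min_change: float = 0.05) -> bool:
    """Return True when the date moved forward but the copy changed
    by less than min_change (a hypothetical threshold, not a Google value)."""
    if new_date <= old_date:
        return False  # the date was not bumped, nothing to flag
    similarity = SequenceMatcher(None, old_text, new_text).ratio()
    return (1.0 - similarity) < min_change

# Hypothetical revisions for illustration.
original = "Our outlook for bonds is cautious."
revised = ("Our outlook for bonds is cautious. Since the July meeting, rate "
           "expectations have shifted and we now favor shorter durations.")

# Same copy, newer date: flagged.
print(date_bump_without_update(original, original, date(2023, 1, 10), date(2024, 8, 1)))
# Substantially revised copy, newer date: not flagged.
print(date_bump_without_update(original, revised, date(2023, 1, 10), date(2024, 8, 1)))
```

A check like this could run in a CMS workflow before a republished page goes live, so that the date only changes when the content does.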

Stay on topic

With every piece of content you publish, you’re either confirming or confusing Google’s understanding of your site and what it’s authoritative on. If you’re an investment firm and you’re unfortunate enough to have to deal with a thought leader who every once in a while likes to blog about fine cars, be aware that there are consequences. It’s not just a case of that post not performing well (and it won’t), but that indulgence could hurt others.

Fischer says Google uses something called deltaPageQuality to measure the qualitative difference between individual documents in a domain or cluster.

The initial ranking

How much attention do you pay to the ranking of a new piece of content straight out of the gate?

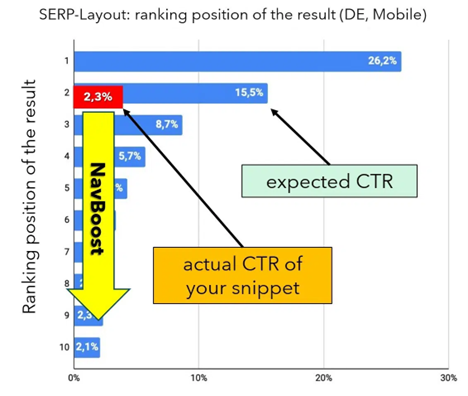

Citing a graphic by Johannes Beus presented at this year’s CAMPIXX in Berlin (shown here), Fischer explains that Google has expectations of performance by ranking position, and that adjustments are made when a document over- or underperforms.

The #2 position should get a clickthrough rate of 15.5%, for example. If it generates significantly more or less, it could be moved up or down as needed.

Figure 6: If the “expected_CTR” deviates significantly from the actual value, the rankings are adjusted accordingly. (Datasource: J. Beus, SISTRIX, with editorial overlays)
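You can run the same kind of comparison on your own Search Console numbers. The sketch below is a rough illustration under stated assumptions: only the 15.5% figure for position #2 comes from the article, the 25% tolerance band is a placeholder of mine, and the function name `ctr_signal` is hypothetical. How large a gap Google treats as “significant” is not something the article spells out.

```python
# Expected CTR by ranking position. Only the #2 value (15.5%) comes from
# the article; add other positions from your own benchmark data.
EXPECTED_CTR = {2: 0.155}

def ctr_signal(position: int, clicks: int, impressions: int,
               tolerance: float = 0.25) -> str:
    """Classify actual CTR against the expected CTR for a position.

    tolerance is a hypothetical relative band (25% either side of the
    expected value), not a figure published by Google.
    """
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    expected = EXPECTED_CTR.get(position)
    if expected is None:
        return "no benchmark"
    actual = clicks / impressions
    if actual > expected * (1 + tolerance):
        return "overperforming"
    if actual < expected * (1 - tolerance):
        return "underperforming"
    return "in line"

print(ctr_signal(2, clicks=90, impressions=1000))   # 9.0% against 15.5% expected
print(ctr_signal(2, clicks=160, impressions=1000))  # 16.0% against 15.5% expected
```

Pages that come back as underperforming are the first candidates for a new title and description.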

When it comes to new, unknown pages, Google relies on extensive data from a page’s environment to estimate signals. “It’s probable that values from neighboring pages are also used for other critical signals, helping new pages climb the rankings despite lacking significant traffic or backlinks,” Fischer writes.

But then it’s game on.

If a document newly appears in the top 10 for a search query and its clickthrough rate (CTR) falls significantly short of expectations, expect a drop in ranking within a few days (depending on the search volume).

“You only have a short time to react and adjust the snippet if the CTR is poor (usually by optimizing the title and description) so that more clicks are collected,” Fischer writes. “Otherwise, the position deteriorates and is subsequently not so easy to regain. Tests are thought to be behind this phenomenon. If a document proves itself, it can stay. If searchers don’t like it, it disappears again.”

Click metrics for documents are stored and evaluated over a period of 13 months (one month overlap in the year for comparisons with the previous year), according to Fischer’s report.

Be the final destination

“The longer visitors stay on your site, the better the signals your domain sends, which benefits all of your subpages. Aim to be the final destination by providing all the information they need so visitors won’t have to search elsewhere,” writes Fischer. Google records the search result that had the last “good” click in a search session as the lastLongestClick.

In fact, this is the thinking behind our recent post, “You need to rank #1 for your product tickers.” The wealth of information on mutual fund and ETF profiles likely means that most ticker searchers won’t need to go anywhere else if they land on your site first. Your ranking in the top spot for all products will boost the site overall. Again, I’d make that a priority.

In the same spirit, pages with a low number of visits or those that don’t achieve a good ranking over time should be removed, Fischer says.

No more with the Learn mores

There are too many Learn more, Read now and otherwise generic call to action buttons on investment sites not to pass on this comment from Fischer: “The text before and after a link, not just the anchor text itself,” he writes, “are considered for ranking. Make sure the text naturally flows around the link. Avoid using generic phrases like ‘click here,’ which has been ineffective for over twenty years.”
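To see how widespread the habit is on your own pages, a quick scan helps. This is a minimal sketch using only Python’s standard library. The phrase list is my own starting point (extend it to match your site’s buttons), and the class and function names are made up for illustration.

```python
from html.parser import HTMLParser

# Starter list of generic link labels; an assumption, extend as needed.
GENERIC_PHRASES = {"learn more", "read more", "read now", "click here"}

class GenericLinkFinder(HTMLParser):
    """Collects (anchor text, href) pairs whose text is a generic phrase."""

    def __init__(self):
        super().__init__()
        self._href = None   # href of the link being read, or None outside a link
        self._text = []     # text fragments collected inside the current link
        self.flagged = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse whitespace, then ignore trailing punctuation and arrows.
            text = " ".join("".join(self._text).split())
            if text.lower().rstrip(".!>» ") in GENERIC_PHRASES:
                self.flagged.append((text, self._href))
            self._href = None

def find_generic_links(html: str) -> list:
    finder = GenericLinkFinder()
    finder.feed(html)
    finder.close()
    return finder.flagged

# Hypothetical page fragment for illustration.
page = ('<p>Our <a href="/insights/rates">2024 rate outlook</a> is out. '
        '<a href="/funds">Learn more</a></p>')

print(find_generic_links(page))
```

Each flagged link is a chance to rewrite the label, and the sentence around it, so that both describe where the click leads.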

So, those were some of my quick takeaways from one of the best long-form posts I’ve read year to date. Get yourself a big ole box of candy and see what strikes you.

Ready to talk about your firm’s digital strategy? Send us an email.

An E-E-A-T Content Quality Checklist

Investment marketers don’t need to master the behind-the-scenes detail of how Google works to intuitively understand the E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness) content quality guidelines that influence search rankings.

Just consider the thought leadership success of First Trust’s Brian Wesbury or Bill Gross, especially when he was at PIMCO. Both have distinctive personalities and experience that inform their writing and make it memorable, not to mention quotable (driving backlinks). And, there are a few other such personalities whose individualized perspectives elevate the entire website they contribute to.

Here’s a sampling of content, quality and expertise questions as provided by Google. It proves the point that can’t be said enough—what’s good for Google is also what’s good for readers. Write in an authoritative and engaging way, and rankings should follow.

- Does the content provide original information, reporting, research, or analysis?

- Does the content provide a substantial, complete, or comprehensive description of the topic?

- Does the content provide insightful analysis or interesting information that is beyond the obvious?

- If the content draws on other sources, does it avoid simply copying or rewriting those sources, and instead provide substantial additional value and originality?

- Does the main heading or page title provide a descriptive, helpful summary of the content?

- Is this the sort of page you’d want to bookmark, share with a friend, or recommend?

- Would you expect to see this content in or referenced by a printed magazine, encyclopedia, or book?

- Does the content provide substantial value when compared to other pages in search results?

- Does the content present information in a way that makes you want to trust it, such as clear sourcing, evidence of the expertise involved, background about the author or the site that publishes it, such as through links to an author page or a site’s About page?

- If someone researched the site producing the content, would they come away with an impression that it is well-trusted or widely-recognized as an authority on its topic?

- Is this content written or reviewed by an expert or enthusiast who demonstrably knows the topic well?

- Are you mainly summarizing what others have to say without adding much value?

- Does your content leave readers feeling like they need to search again to get better information from other sources?

- Are you changing the date of pages to make them seem fresh when the content has not substantially changed?

- Is it self-evident to your visitors who authored your content?

- Do pages carry a byline, where one might be expected?

- Do bylines lead to further information about the author or authors involved, giving background about them and the areas they write about?